Overview

Design Brief: Augmenting Urban Experiences

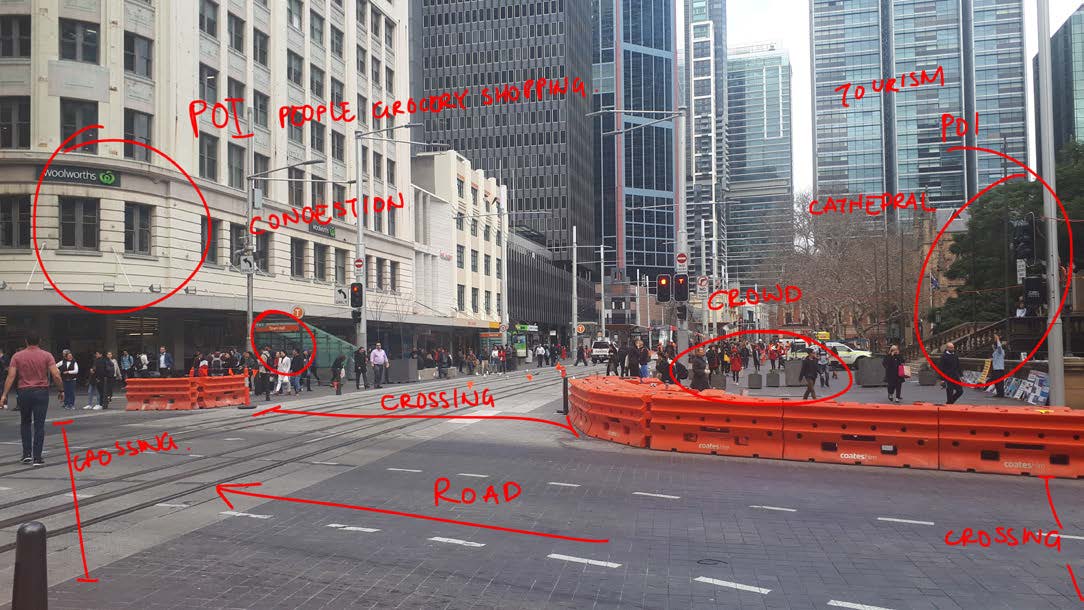

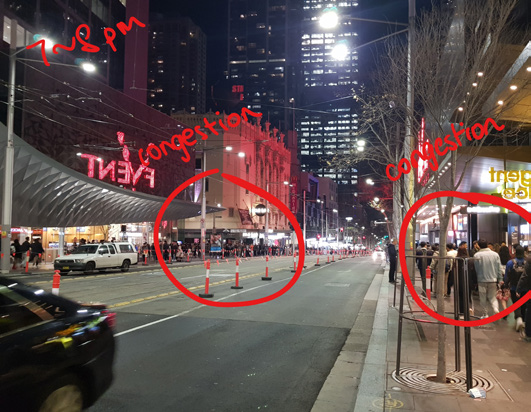

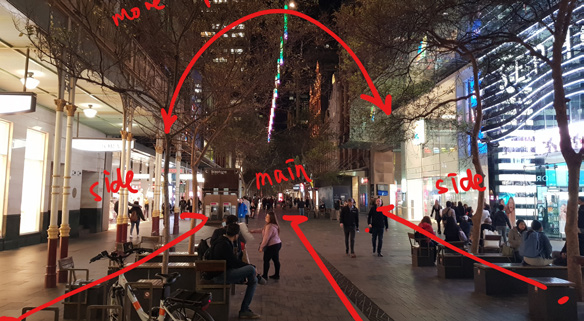

As cities grow denser and more populated, pedestrian congestion continues to rise. Unsustainable numbers of people in these urban environments reduce walkability, as streets dense with foot traffic need more frequent repairs and raise safety concerns. Poor walkability has been linked to increased stress and anxiety, leading to a less approachable environment for pedestrians and a lack of empathy towards other people on the street.

About Project eMotus

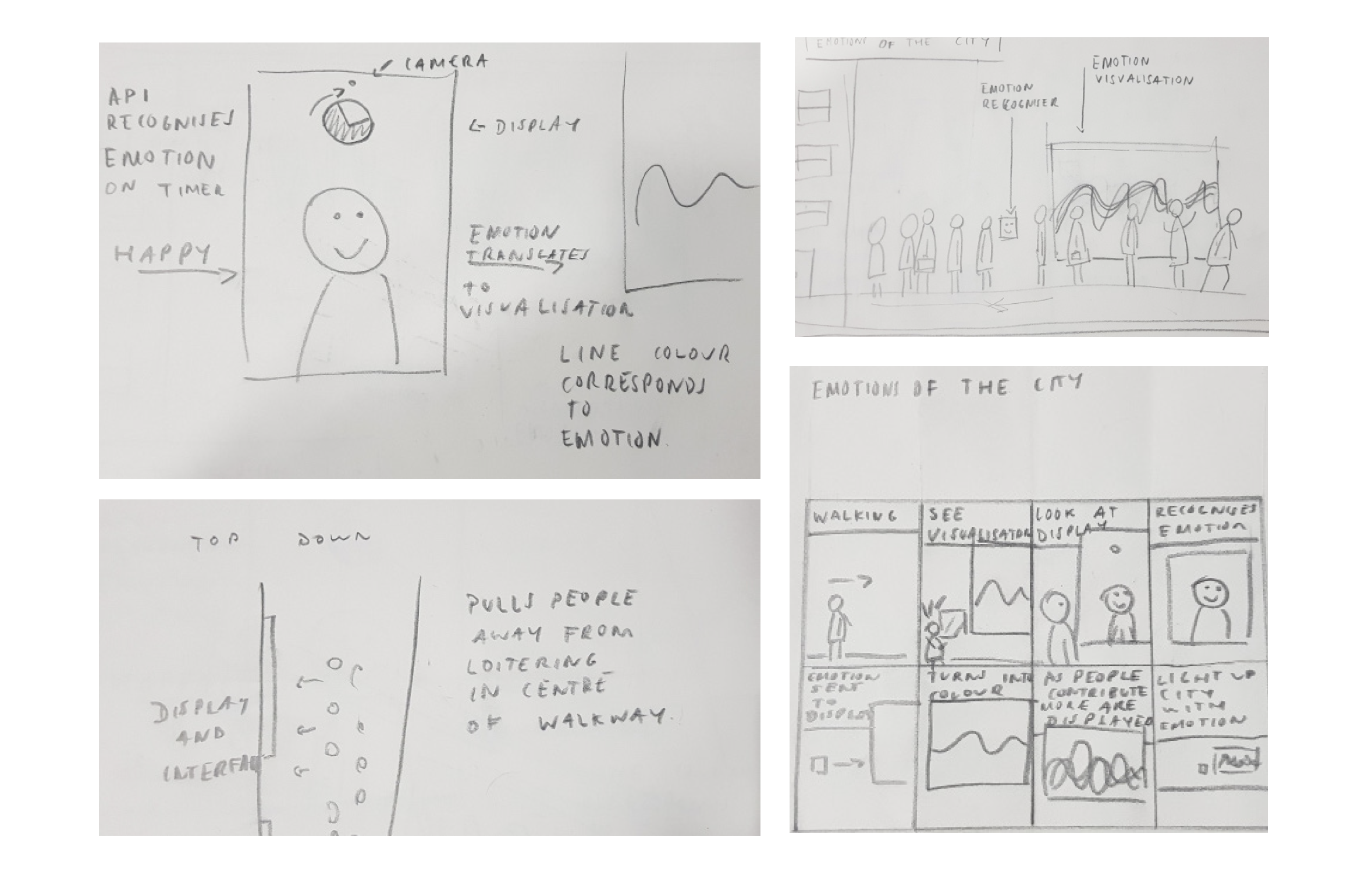

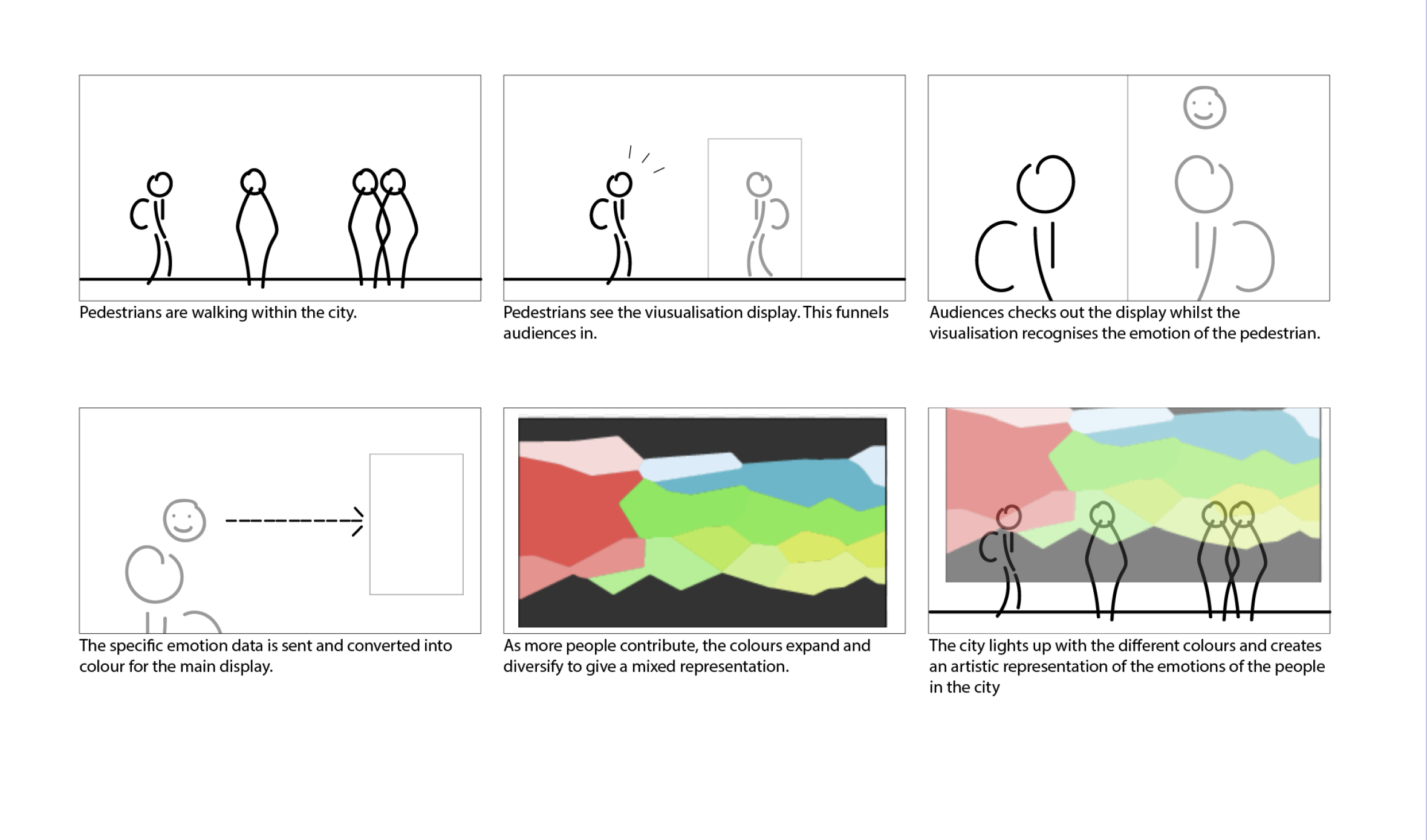

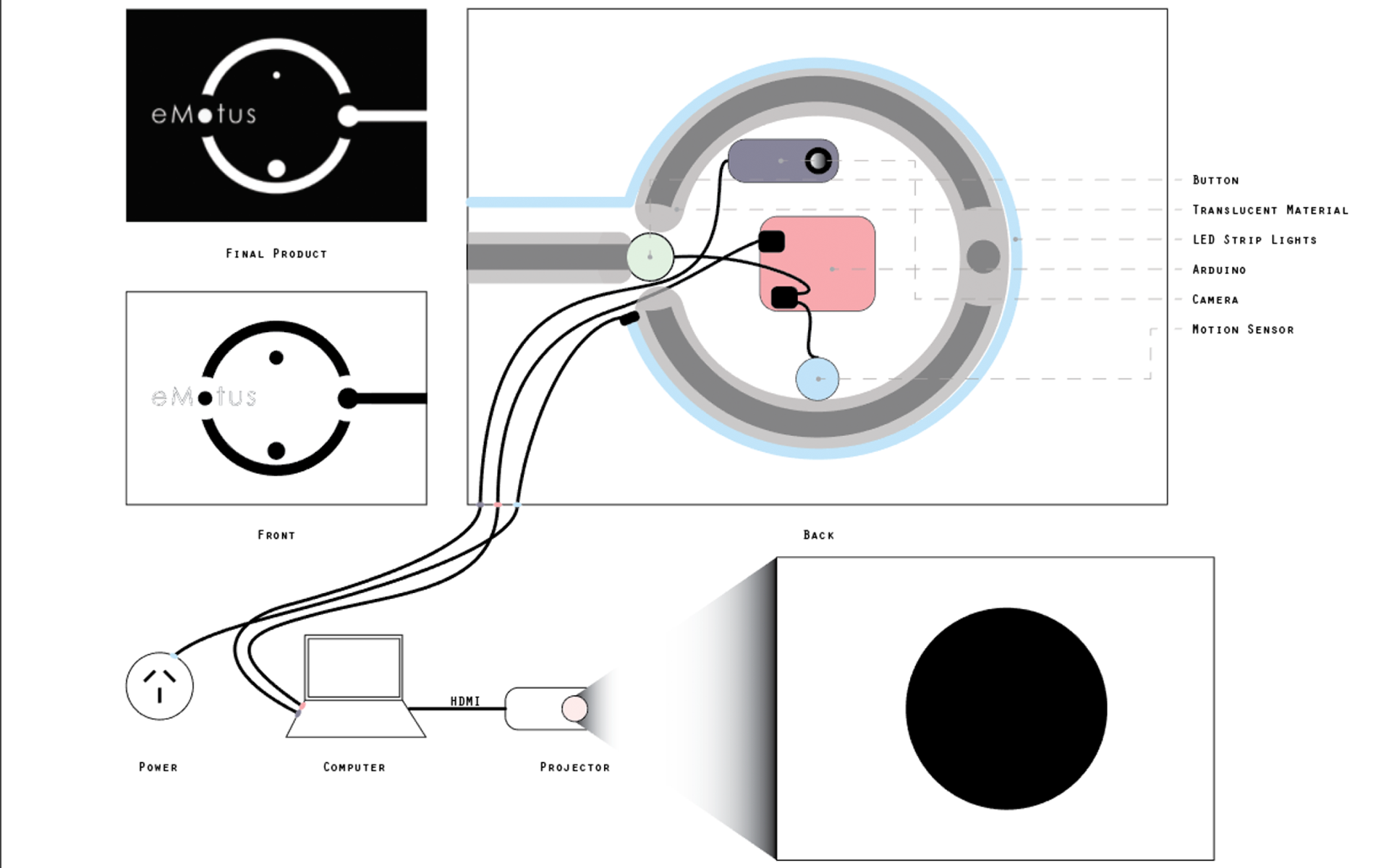

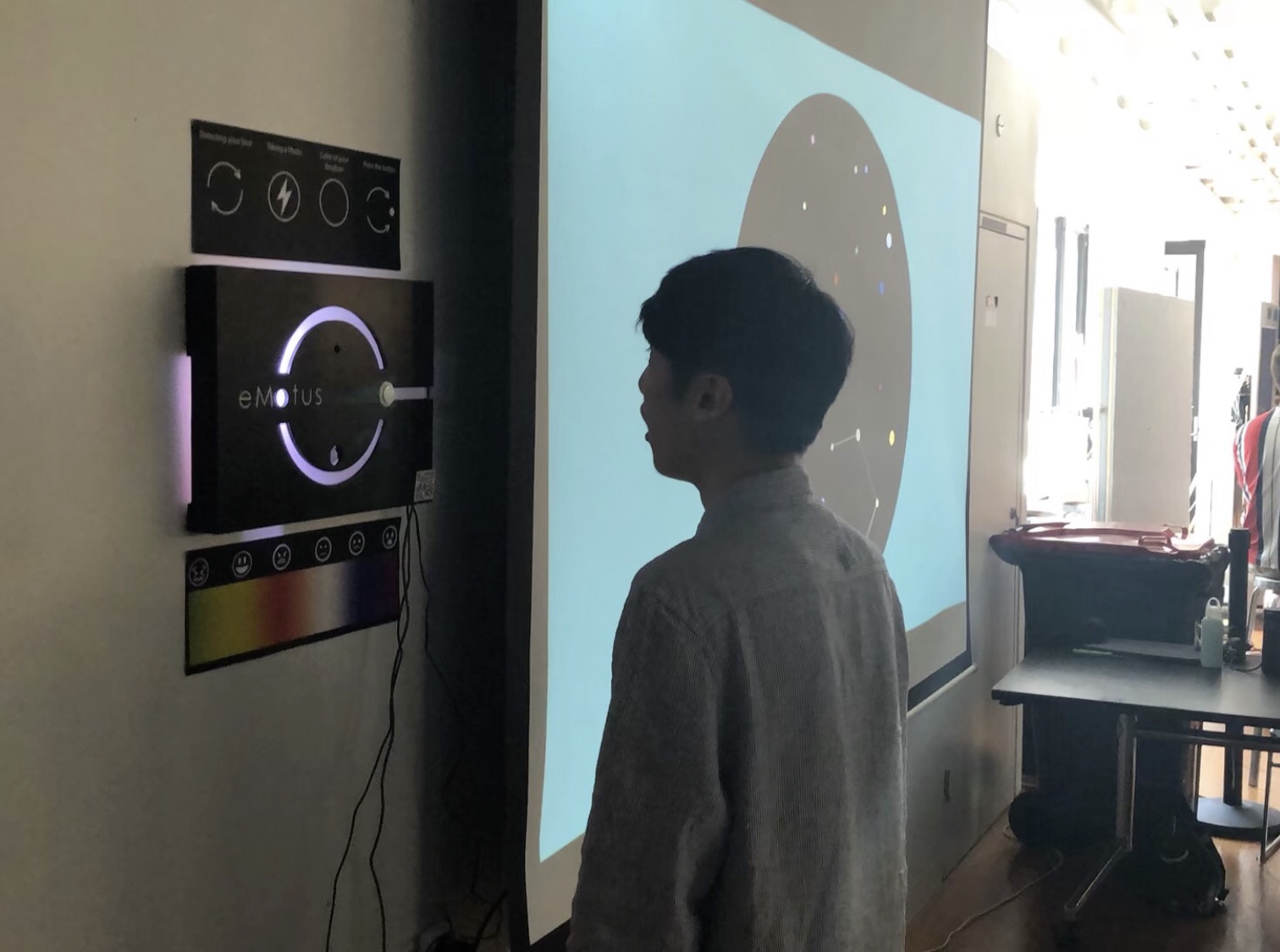

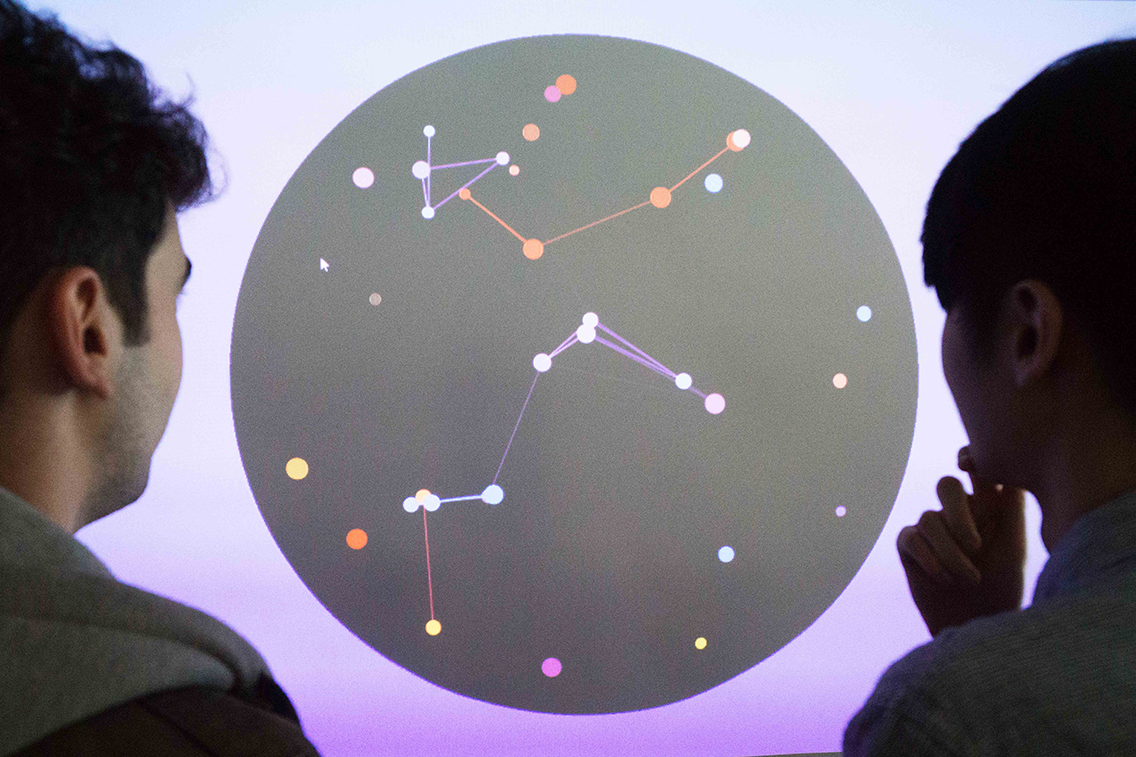

eMotus is an interactive emotion tracking and visualisation system. It is a method of augmenting urban experiences that gives people a new way to perceive the often unseen information of emotion within urban landscapes.

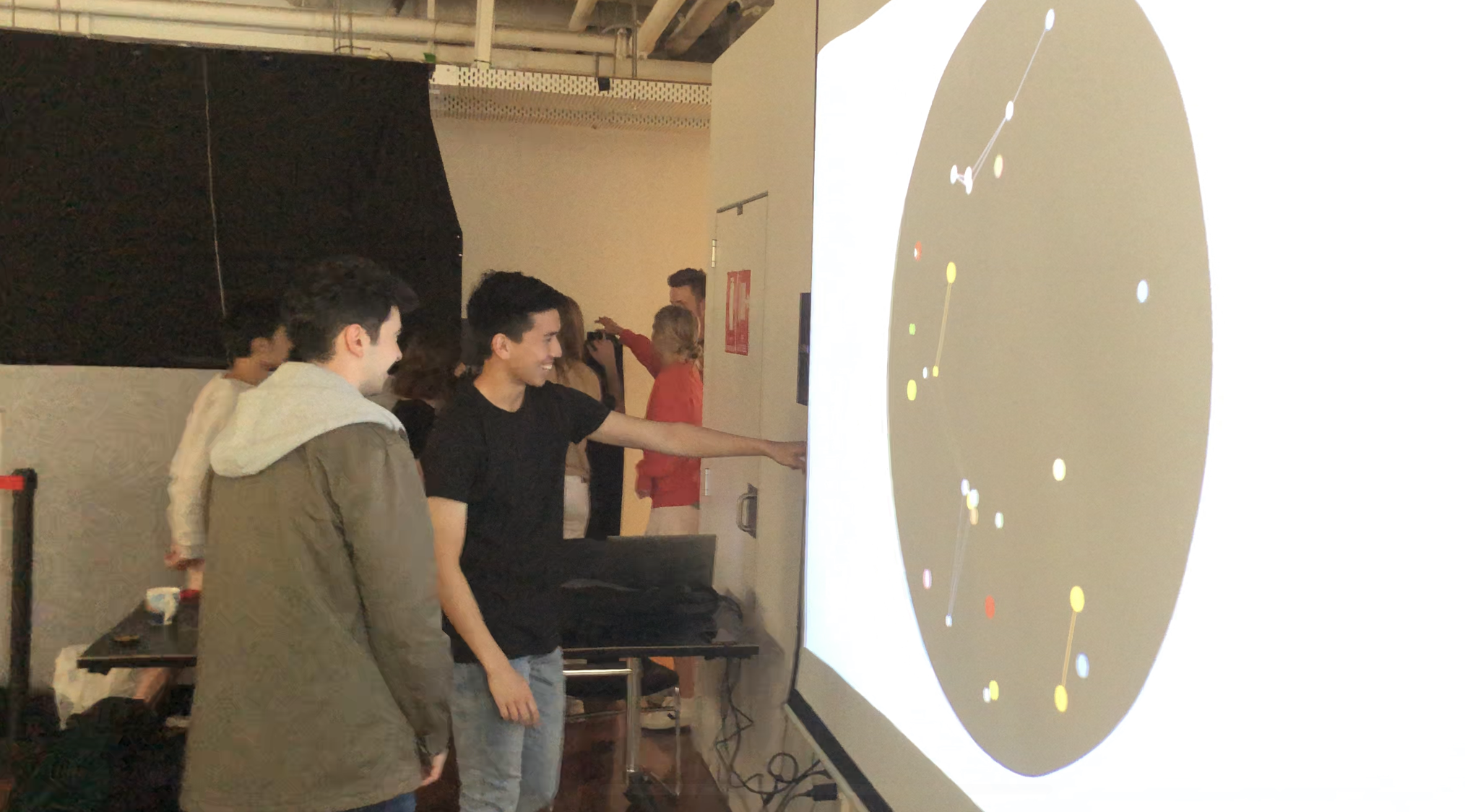

It is an interactive display that detects the emotions of a passerby who engages with it. Using a facial recognition API, the system determines the emotion of the person interacting and converts it into a colour that best represents the captured data. The user is then prompted to use gesture control to contribute their emotion to a large art display, a mosaic of emotions that shows the public how people feel when walking.
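The emotion-to-colour step described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual implementation: the emotion names, the palette values, and the `emotion_to_colour` helper are all assumptions, and the input is assumed to be a dictionary of per-emotion confidence scores of the kind many facial recognition APIs return.

```python
# Hypothetical palette: these emotion names and RGB values are illustrative
# assumptions, not the colours used in eMotus.
PALETTE = {
    "happiness": (255, 215, 0),
    "sadness": (30, 60, 200),
    "anger": (220, 20, 20),
    "neutral": (128, 128, 128),
}


def emotion_to_colour(scores):
    """Blend palette colours, weighted by each emotion's confidence score.

    `scores` maps emotion names to non-negative confidences (assumed shape).
    Emotions missing from PALETTE are ignored. Returns a hex colour string.
    """
    known = {name: max(s, 0.0) for name, s in scores.items() if name in PALETTE}
    total = sum(known.values())
    if total == 0:
        # Nothing usable was detected, so fall back to the neutral colour.
        return "#%02x%02x%02x" % PALETTE["neutral"]
    blended = tuple(
        round(sum(PALETTE[name][i] * w for name, w in known.items()) / total)
        for i in range(3)
    )
    return "#%02x%02x%02x" % blended


# A face read as fully happy maps straight to the "happiness" colour.
print(emotion_to_colour({"happiness": 1.0}))  # "#ffd700"
```

Blending by confidence, rather than picking only the top-scoring emotion, lets mixed expressions produce intermediate colours, which may suit a mosaic built from many contributions.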

Timeline: 13 Weeks - 2019

Role: Researcher, UX/UI Designer, Product Designer, Web Developer and Videographer

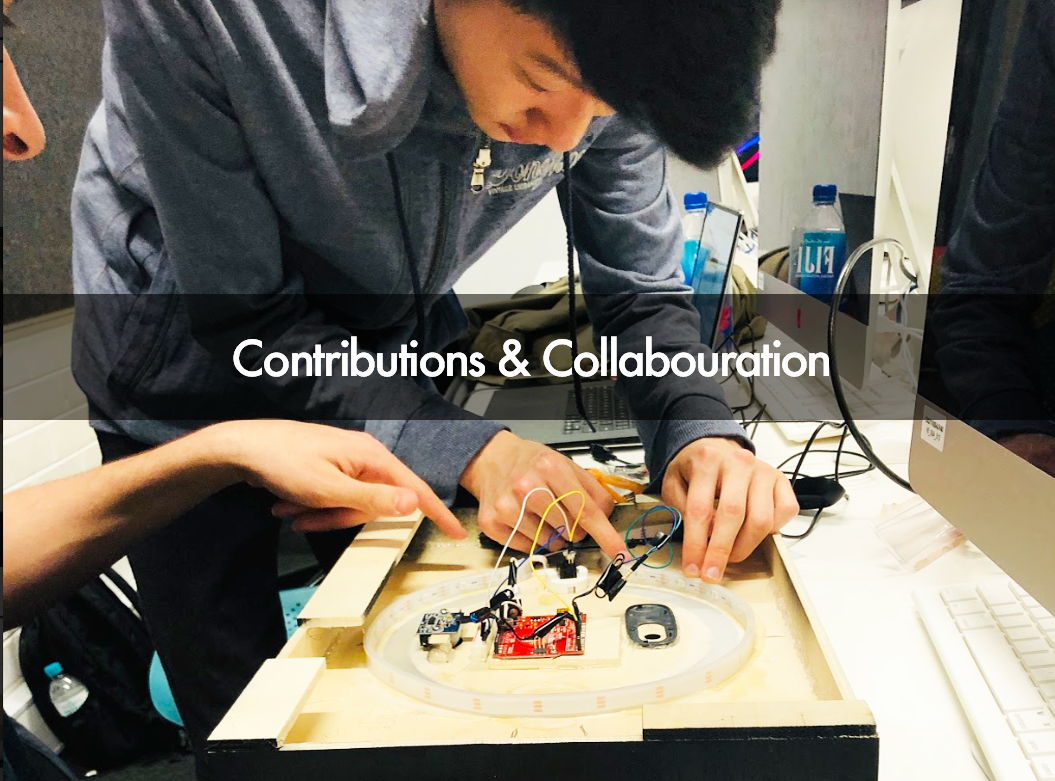

Team: Abhinav Bose, Daniel Lee, Dominic Musolino, Ray Hwang

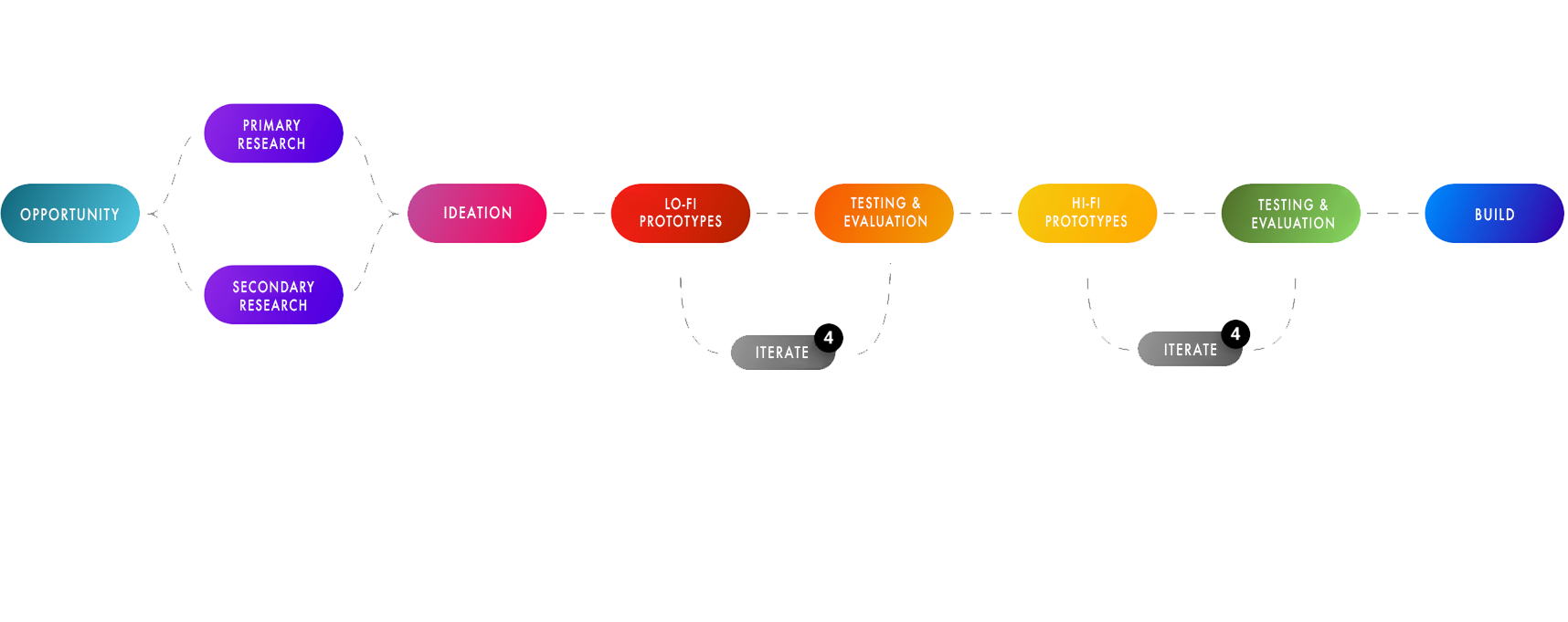

STAGE 1

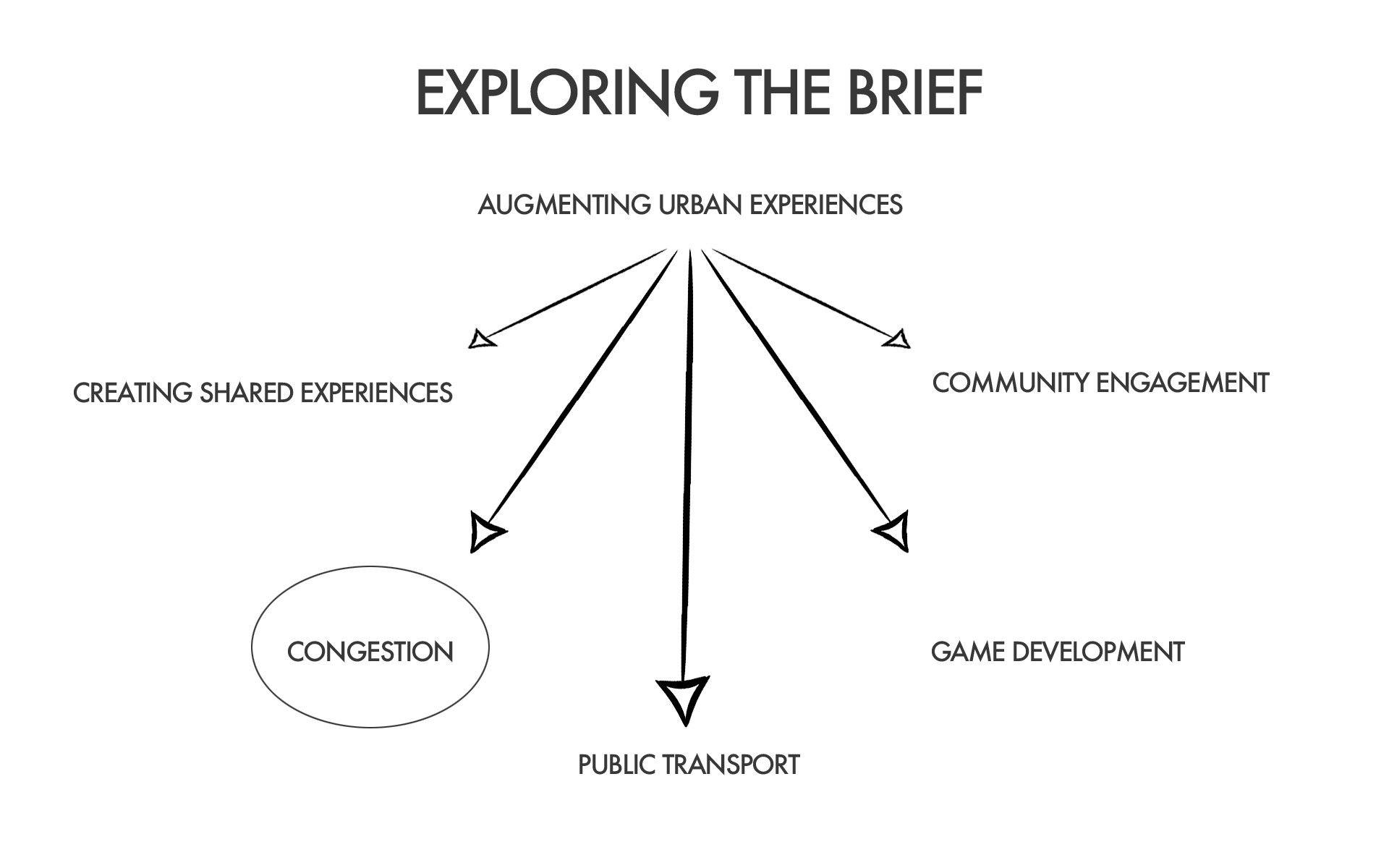

a. Exploring the brief

b. Defining the problem

c. Research

d. Identifying user needs

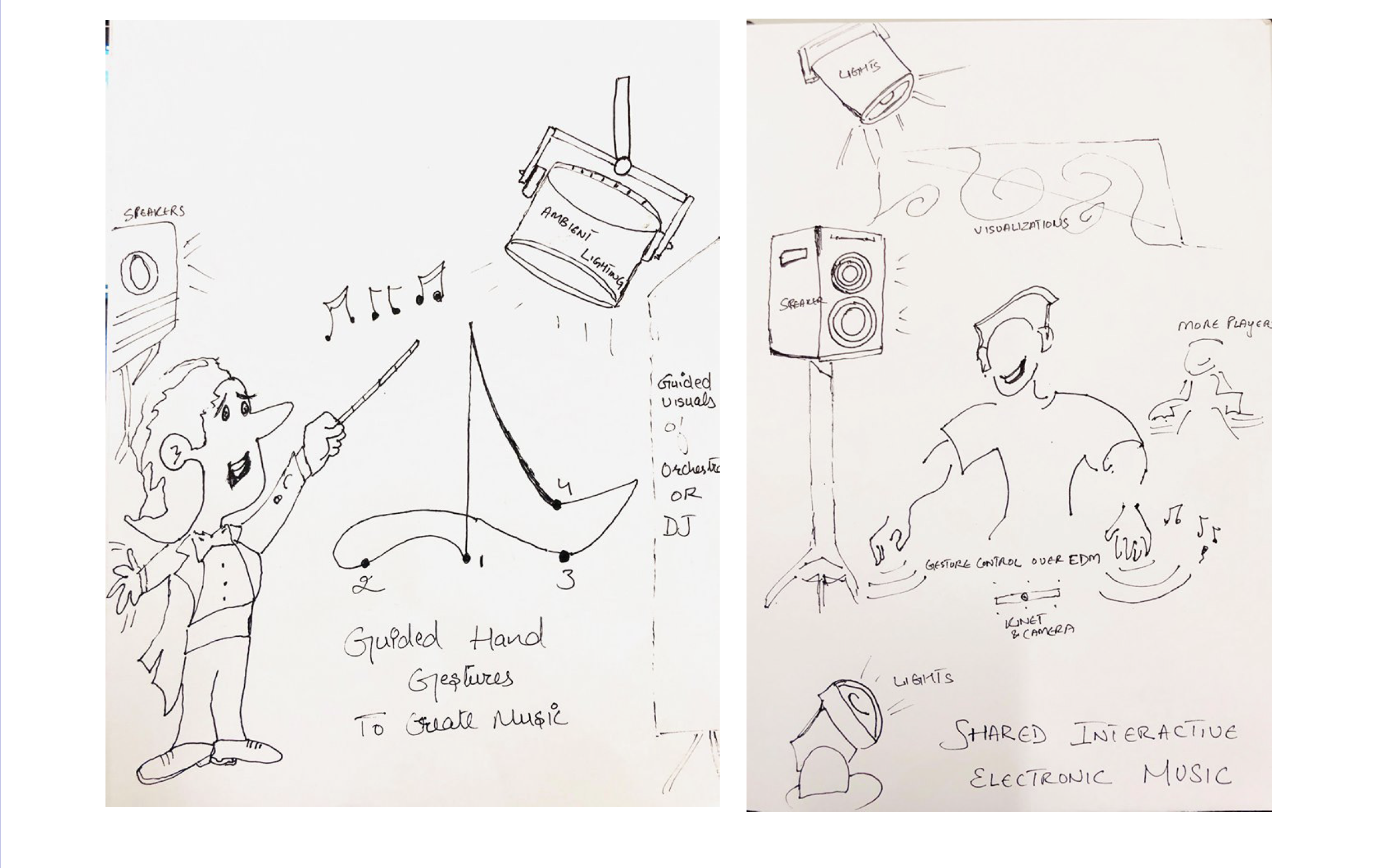

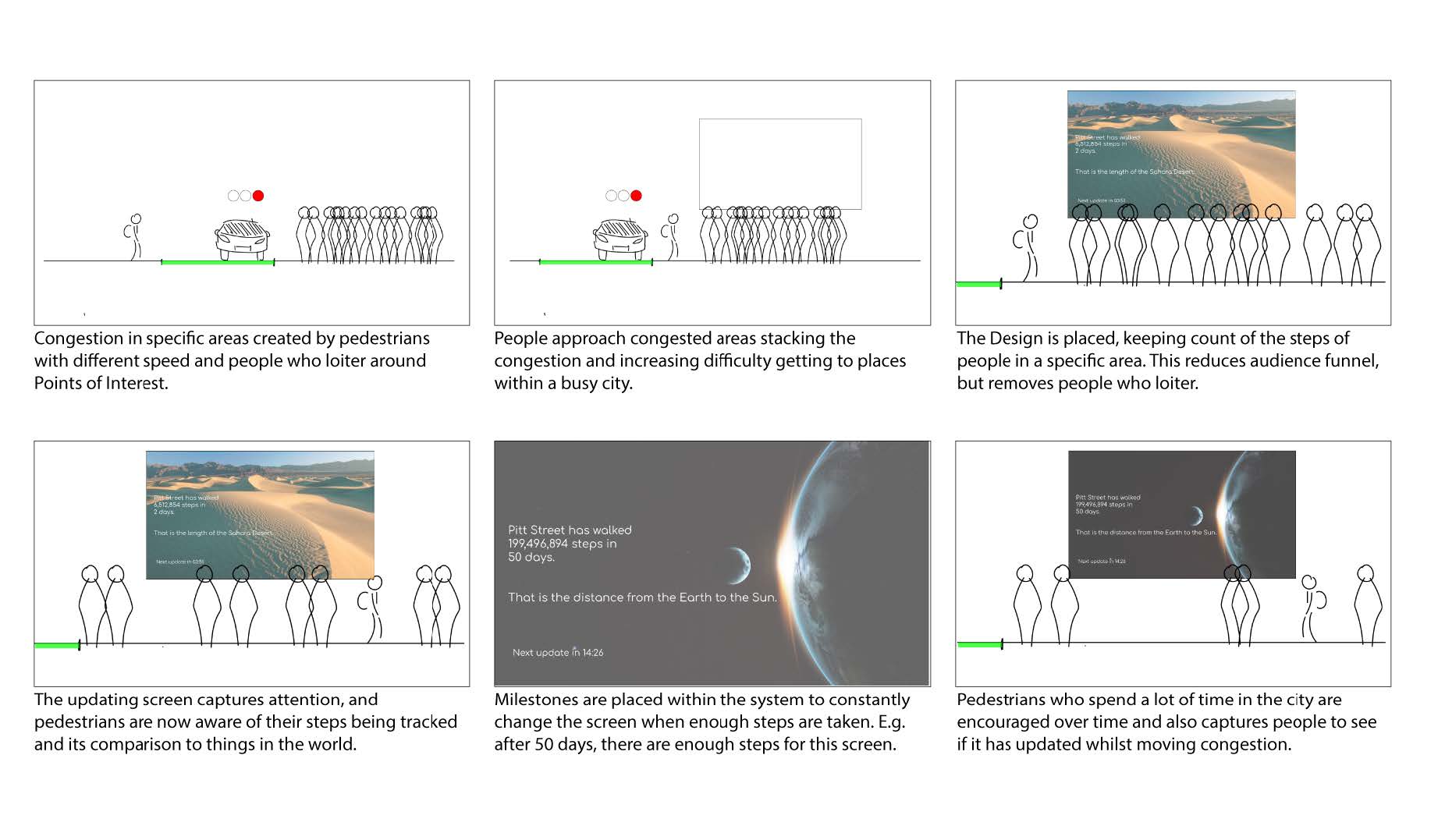

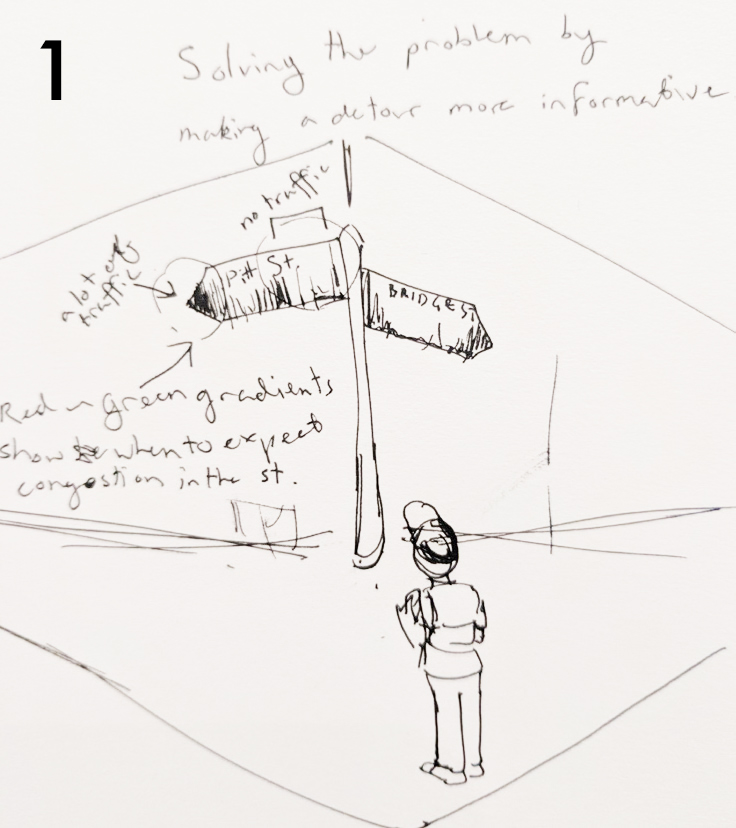

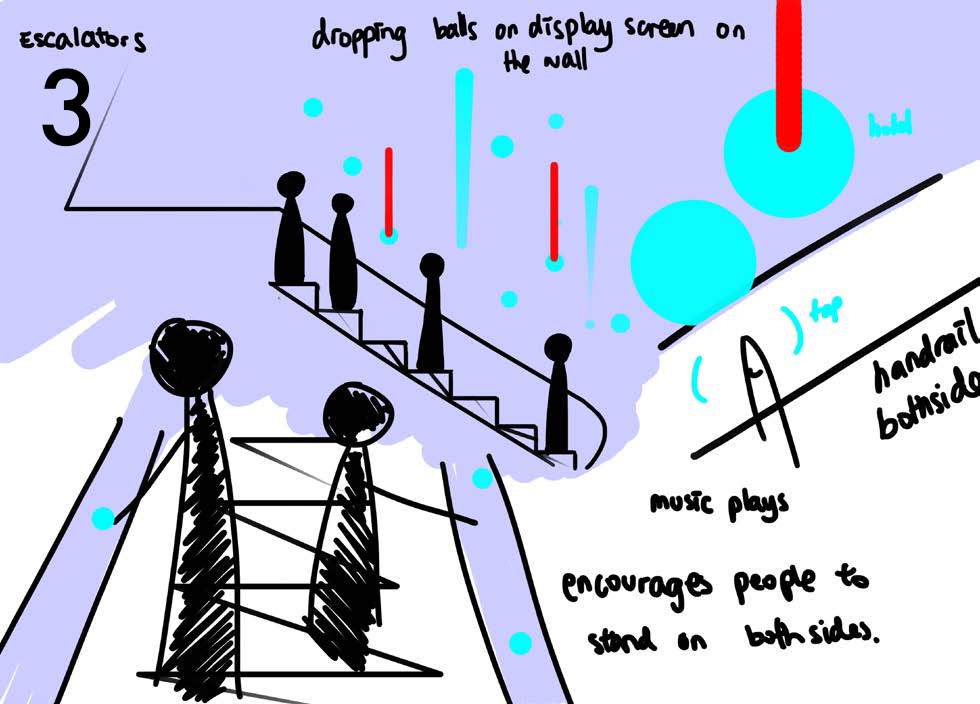

e. Ideation

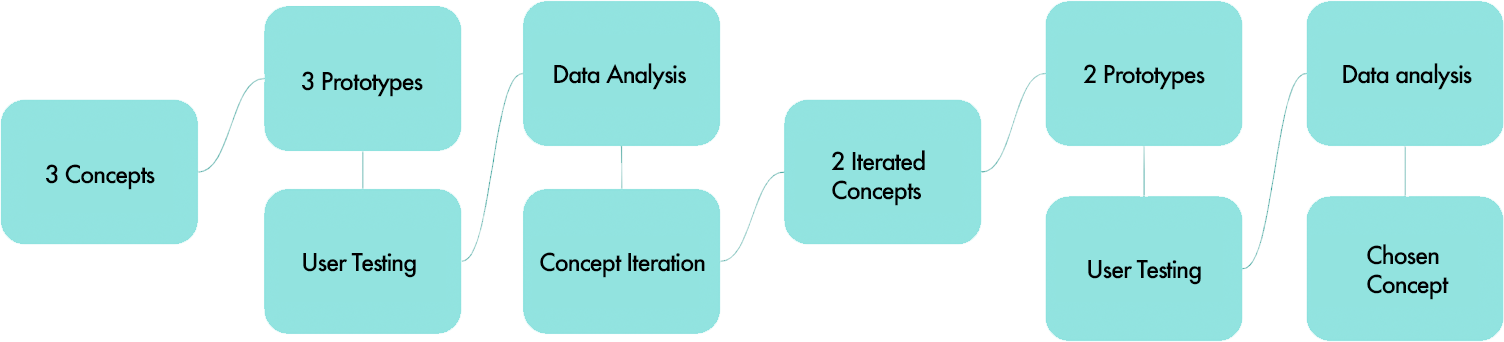

STAGE 2

a. Lo-fi prototype construction

b. User testing 3 concepts

c. Data analysis

d. Iteration of chosen concept

e. User testing

f. Decision matrix

STAGE 3

a. Physical product creation

b. Curating the UX

c. Website development

d. Software development

e. Visual art development

f. Product documentation

g. Exhibition

STAGE 4

a. Summary of project

b. Challenges during process

c. Experience and learnings

STAGE 1

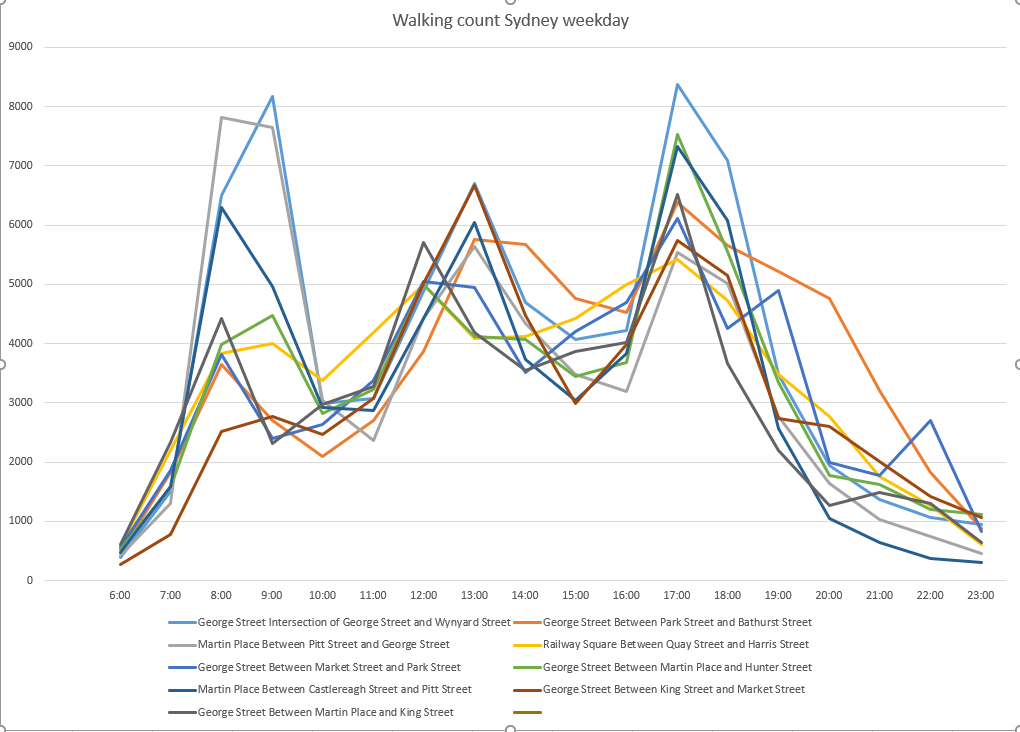

We were amazed by the number of creative ideas this brief sparked. We could tap into any domain and build something with an impact. We explored a couple of options based on things we wanted to improve around us. Finally, after some research into different domains, we found one that the entire team agreed upon. We all saw pedestrian congestion as a pressing issue in our city, so we researched it further.